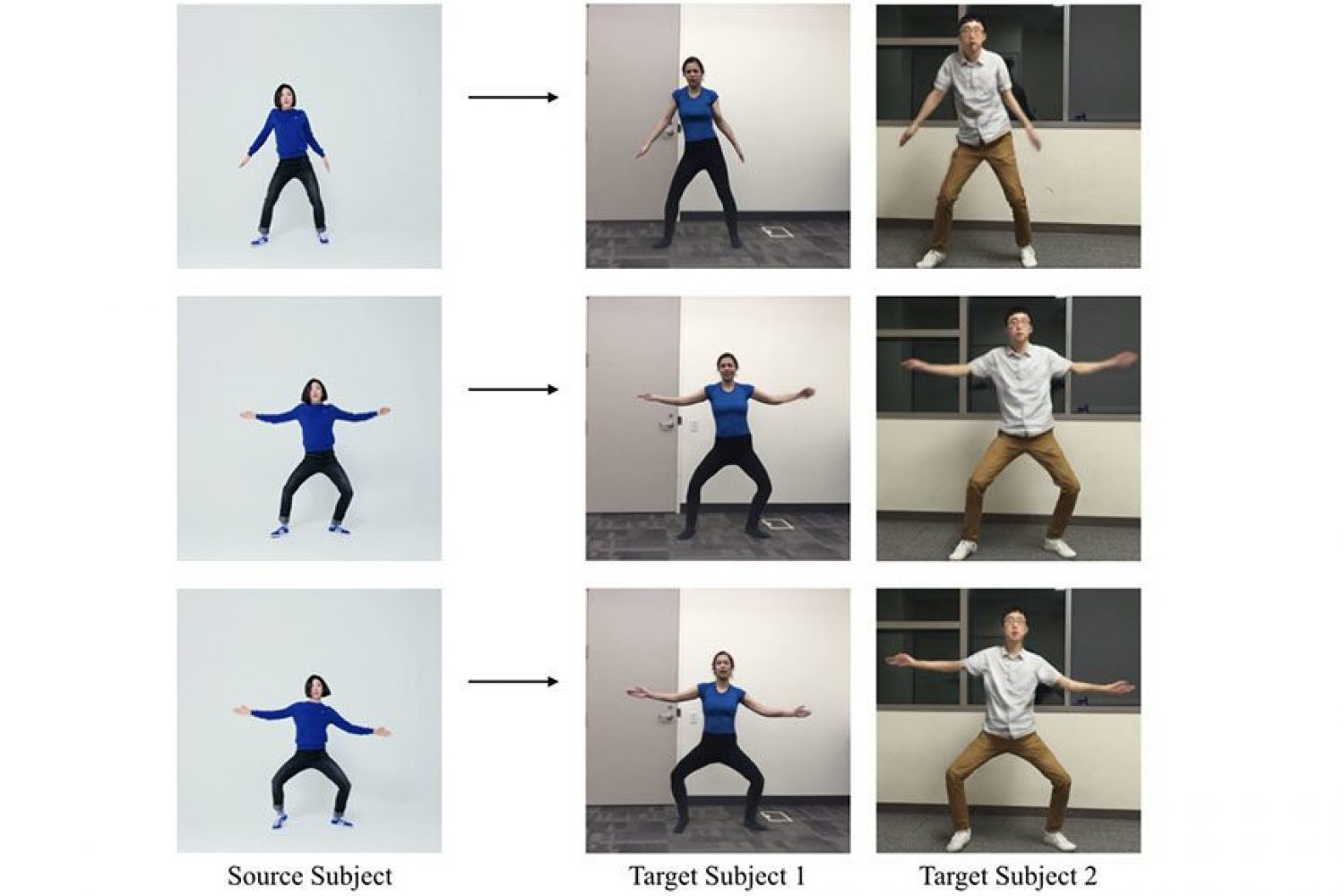

This paper presents a simple method for "do as I do" motion transfer: given a source video of a person dancing, we can transfer that performance to a novel (amateur) target after only a few minutes of the target subject performing standard moves. We pose this problem as per-frame image-to-image translation with spatio-temporal smoothing. Using pose detections as an intermediate representation between source and target, we learn a mapping from pose images to a target subject’s appearance. We adapt this setup for temporally coherent video generation, including realistic face synthesis.
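The pipeline described above can be illustrated with a rough Python sketch. Everything in it is an assumption made for illustration: poses are represented as lists of `(x, y)` keypoints, `smooth_poses` is a plain moving average standing in for the spatio-temporal smoothing, and `generator` is a hypothetical placeholder for the learned mapping from a pose to the target subject's appearance. None of these names come from the paper.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

Keypoint = Tuple[float, float]
Pose = List[Keypoint]
Frame = TypeVar("Frame")


def smooth_poses(poses: Sequence[Pose], window: int = 3) -> List[Pose]:
    """Average each keypoint over a centered temporal window.

    Assumption: a simple stand-in for spatio-temporal smoothing,
    not the authors' actual procedure.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed: List[Pose] = []
    for t in range(len(poses)):
        # Clamp the window at the start and end of the sequence.
        lo, hi = max(0, t - half), min(len(poses), t + half + 1)
        neighbors = poses[lo:hi]
        pose: Pose = []
        for k in range(len(poses[t])):
            x = sum(p[k][0] for p in neighbors) / len(neighbors)
            y = sum(p[k][1] for p in neighbors) / len(neighbors)
            pose.append((x, y))
        smoothed.append(pose)
    return smoothed


def transfer_motion(
    source_poses: Sequence[Pose],
    generator: Callable[[Pose], Frame],  # hypothetical pose-to-appearance model
    window: int = 3,
) -> List[Frame]:
    """Per-frame translation: each smoothed source pose becomes one target frame."""
    return [generator(pose) for pose in smooth_poses(source_poses, window)]
```

In this sketch the source performer contributes only the pose sequence, while everything about the target's appearance lives in `generator`, which would be trained on the few minutes of target footage. That split mirrors the role of pose as an intermediate representation between source and target.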

Everybody Dance Now – Motion Transfer Paper by Caroline Chan, Shiry Ginosar, Tinghui Zhou, and Alexei A. Efros